
24-bit mastering on iOS: what sources?

The user and all related content has been deleted.

Comments

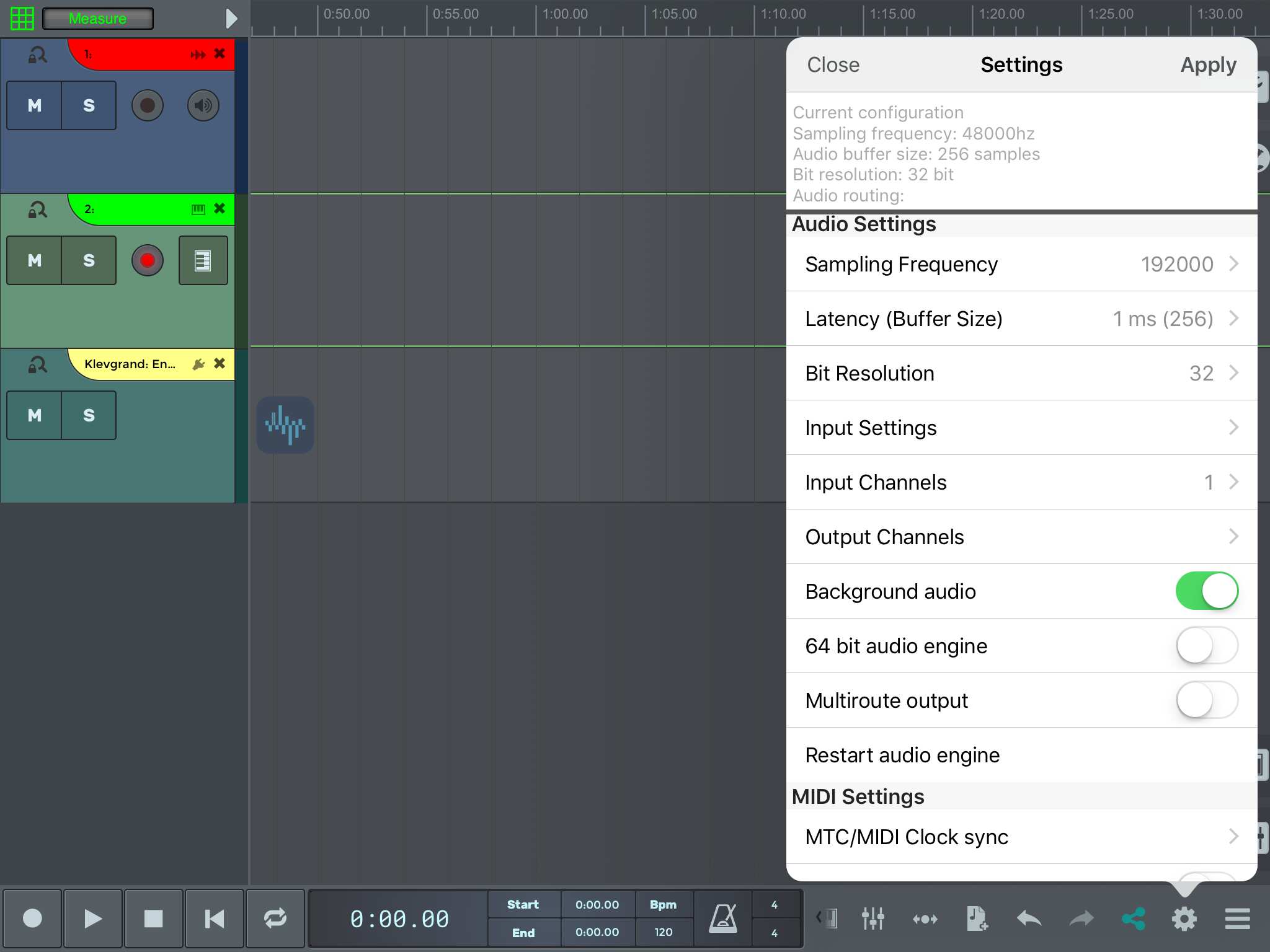

Just to throw in: Audio Evolution Mobile will do 24-bit at up to 192 kHz, as WAV or M4A.

n-Track Studio

Gadget can export 24-bit audio at various sample rates if you choose to export as an Ableton Live session; you can then extract the audio segments you need and use them wherever you like...

You really don't need 24-bit synth sources unless you're doing extremely subtle stuff with lots of parts near absolute silence. Digitally synthesized sounds are noise-free by nature.

24-bit matters a lot in microphone recording, especially if the material is dynamically processed later, and in large orchestral recordings with a lot of channels (where noise may add up).

The standard use of 24-bit files today is more a matter of convenience, probably introduced by early versions of Pro Tools, which needed all files in a project to share the same bit depth (either 16 or 24 bit) rather than mixing them. Today no DAW has that restriction (afaik).

Things don't sound 'better' in 24-bit; they just lack the quantization noise/harshness that converter quantization introduces in the -80 to -90 dB range.
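To put a number on that quantization noise, here is a minimal Python sketch (an illustration, not taken from any app in this thread) that rounds a sine wave to an N-bit grid without dither and measures the error power against the signal. The 997 Hz tone, 48 kHz rate and 0.99 amplitude are arbitrary assumptions, and the result is a theoretical floor; real converters add their own analog noise on top.

```python
import math

def quantize(x: float, bits: int) -> float:
    """Round a sample in [-1.0, 1.0) to the nearest step of a signed `bits`-bit grid."""
    scale = 2 ** (bits - 1)
    q = round(x * scale)
    # Clamp to the representable integer range, as a converter would.
    q = max(-scale, min(scale - 1, q))
    return q / scale

def quantization_snr_db(bits: int, n: int = 48000,
                        freq: float = 997.0, rate: float = 48000.0) -> float:
    """Signal-to-quantization-noise ratio (dB) of a near-full-scale sine, no dither."""
    signal_power = 0.0
    noise_power = 0.0
    for i in range(n):
        x = 0.99 * math.sin(2 * math.pi * freq * i / rate)
        error = quantize(x, bits) - x
        signal_power += x * x
        noise_power += error * error
    return 10 * math.log10(signal_power / noise_power)

for bits in (16, 24):
    print(f"{bits}-bit: {quantization_snr_db(bits):.1f} dB")
```

Each extra bit halves the step size, so expect roughly 6 dB more per bit: the 16-bit result lands in the high 90s of dB and the 24-bit one about 48 dB above it.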

16-bit covers a (theoretical) range of 96 dB, which is huge. Contemporary masters have less than 6 dB of headroom... you couldn't even detect those 24-bit sources.
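For reference, the 96 dB figure is just the ratio between full scale and one quantization step, expressed in decibels; each bit doubles that ratio and adds about 6.02 dB:

```latex
\mathrm{DR}_N = 20\log_{10}\left(2^{N}\right) = N \cdot 20\log_{10} 2 \approx 6.02\,N\ \text{dB}
\qquad \mathrm{DR}_{16} \approx 96.3\ \text{dB}, \quad \mathrm{DR}_{24} \approx 144.5\ \text{dB}
```

The often-quoted figure of about 98 dB for 16-bit adds 1.76 dB, which applies when the SNR is measured with a full-scale sine (SNR = 6.02 N + 1.76 dB).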

Do the 'Live Export' from Gadget to Dropbox and download the *.wav files as needed.

I was a bit surprised that AudioShare was not an option for exporting a Live Session...

Hopefully Korg will add 'Open In...' for sharing stuff from Gadget.

This would make it super easy to AirDrop stuff between iOS devices and also to send the files to any app that accepts them.

To my knowledge the Gadget developers do keep an eye on the Korg Gadget Forums but seldom reply there.

The 16-bit input is automatically aligned by the mix engine (as the various 24- to 32-bit formats are) to whatever internal precision the engine uses (most operate beyond 32-bit).

There's no gain in headroom if things are properly implemented.
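As a sketch of what that alignment means, assuming the common convention of scaling by 32768 (engines differ on this detail), every 16-bit sample converts to a 32-bit float exactly and converts back without loss. Python's `struct` module is used here only to force single-precision rounding.

```python
import struct

def to_float32(x: float) -> float:
    """Round a Python float (a 64-bit double) to the nearest 32-bit IEEE 754 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def int16_to_float32(sample: int) -> float:
    """Map a signed 16-bit sample onto [-1.0, 1.0) as a 32-bit float."""
    return to_float32(sample / 32768.0)

def float32_to_int16(value: float) -> int:
    """Inverse mapping: scale, round to nearest, clamp to the int16 range (no dither)."""
    return max(-32768, min(32767, round(value * 32768.0)))

def roundtrip_is_lossless() -> bool:
    """Check all 65536 possible 16-bit values survive int16 -> float32 -> int16."""
    return all(float32_to_int16(int16_to_float32(s)) == s
               for s in range(-32768, 32768))

print(roundtrip_is_lossless())  # True
```

Dividing by a power of two only changes the float's exponent, so a value with 16 significant bits never needs rounding in a 24-bit significand.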

Of course, you may assume that some 24-bit material is produced more carefully, and that some 16-bit data sets are of lower quality because of when they were produced.

But the opposite may apply as well, it's not a fixed rule.

As long as it all sounds good I don't really care about the number of bits in use.

For those interested, BM3 does all calculations using 32-bit floats...


Internally most apps use 32 or 64 bit float.
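One reason 32-bit float is a comfortable internal format: IEEE 754 single precision has a 24-bit significand (23 stored bits plus an implicit leading 1), so every 24-bit integer sample value fits without rounding. A quick check in Python, again using `struct` to force single precision:

```python
import struct

def to_float32(x: float) -> float:
    """Round a Python float (a 64-bit double) to the nearest 32-bit IEEE 754 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def exact_in_float32(n: int) -> bool:
    """True if the integer n survives conversion to single precision unchanged."""
    return to_float32(float(n)) == n

# Every signed 24-bit sample value (-2**23 .. 2**23 - 1) fits in float32's significand.
# Spot-check a spread of them, including both extremes, rather than all 16.7 million.
checks = list(range(-2**23, 2**23, 4099)) + [-2**23, 2**23 - 1]
print(all(exact_in_float32(n) for n in checks))  # True
print(exact_in_float32(2**24 + 1))               # False: 16777217 rounds to 16777216
```

64-bit float (53-bit significand) leaves far more room, which is one reason some engines use it for summing.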

Depends on the content: it's a tough task to digitize precisely beyond bit #20, which already requires sophisticated hardware, a good clock and a flawless power supply.

There are reports that those 'spoilt bits' do in fact add up significantly in today's loudness-oriented productions.

Which, by the way, frequently suffer from serious THD figures that wash out any additional 'precision' anyway.
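A standard way to express how many bits a converter really delivers is the effective number of bits (ENOB), derived from its measured SINAD (signal to noise and distortion, so THD counts against it too). A minimal sketch; the 120 dB SINAD figure is an arbitrary illustrative value, not a spec for any particular interface:

```python
def effective_bits(sinad_db: float) -> float:
    """ENOB from a measured SINAD in dB, solving the full-scale-sine
    relation SINAD = 6.02 * N + 1.76 dB for N."""
    return (sinad_db - 1.76) / 6.02

print(round(effective_bits(98.08), 2))  # 16.0
print(round(effective_bits(120.0), 2))  # 19.64
```

Even a converter measuring 120 dB SINAD works out to fewer than 20 effective bits.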

You may know FabFilter Pro-L; here's a performance comparison of three such 'smart limiters' (software supposed to increase perceived loudness without influencing its sound):

http://www.sawstudiouser.com/forums/showthread.php?17682-The-levelizer-is-still-brilliant

That's about 'analog' sources; digitally generated tones depend on algorithms.

Unfortunately, it's often not the most precise calculation that 'sounds' best, but simply the right one.

According to Christoph Kemper (designer of the Access Virus synth and the Kemper Profiling Amp), the choice of a specific algorithm largely depends on the developer's experience and even intuition. It's NOT plain math.

PS regarding your math examples: no sampled value is ever reproduced. None of them.

Those 'samples' are (iirc) coefficients of a reproduction FUNCTION.
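The idea behind this is the sampling theorem's reconstruction formula (Whittaker-Shannon interpolation): in the ideal case the output is a sum of sinc pulses, one per sample and scaled by it, so the waveform is a continuous curve shaped by all the samples together rather than a staircase of held values. A minimal sketch that treats everything outside the sample list as zero; real DACs use finite, windowed filters instead of this ideal sum.

```python
import math

def sinc(u: float) -> float:
    """Normalized sinc, sin(pi*u) / (pi*u), with the removable singularity sinc(0) = 1."""
    if u == 0.0:
        return 1.0
    return math.sin(math.pi * u) / (math.pi * u)

def reconstruct(samples: list[float], t: float) -> float:
    """Evaluate the band-limited signal at time t (in sample periods) as the sum
    of one sinc pulse per sample, each weighted by that sample's value."""
    return sum(x * sinc(t - n) for n, x in enumerate(samples))

samples = [0.0, 0.6, 1.0, 0.6, 0.0, -0.6, -1.0, -0.6]
# Between sample instants, every sample in the list contributes to the output value.
for t in (2.0, 2.25, 2.5, 2.75, 3.0):
    print(t, round(reconstruct(samples, t), 4))
```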

I fully support working at 24 bits or higher while writing music; it's a great safety net. At the same time, I will give you my entire mastering studio if you can hear a difference between 24-bit and 16-bit audio files.

You should try; it's definitely good to test this sort of thing for yourself and not take internet comments at face value.

It's all gonna end up as crappy YouTube or SoundCloud audio played through shitty earbuds tho.

It's no problem doing so... just don't overlook cool 16-bit sources.

I can tell 16 from 24 bits quite easily in decays into silence on an acoustic recording, but I've never even tried this on synths.

Those spectral screenshots are quite interesting in a mastering context.

The 3rd harmonic in Pro-L is 50 dB higher than Levelizer's -92 dB, while Anwida's L1V spectrum is so clean one could assume it's fake. Incredible.

Pro-L is quite well received, as mentioned in the article, yet it introduces a significant amount of unwanted distortion (afaik mostly odd harmonics).
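Why odd harmonics in particular? A limiter or clipper that treats positive and negative peaks identically has a symmetric transfer curve, and a symmetric (odd) nonlinearity applied to a sine produces only odd harmonics. A hypothetical sketch that uses a plain hard clipper as a crude stand-in for a limiter (Pro-L and the others are far more sophisticated) and reads individual harmonic levels off single DFT bins:

```python
import math

def clipped_sine(n: int, cycles: int, drive: float, ceiling: float) -> list[float]:
    """A sine driven into a symmetric hard clipper."""
    out = []
    for i in range(n):
        x = drive * math.sin(2 * math.pi * cycles * i / n)
        out.append(max(-ceiling, min(ceiling, x)))
    return out

def harmonic_level_db(signal: list[float], cycles: int, harmonic: int) -> float:
    """Level of one harmonic in dB relative to a full-scale sine, via a single DFT bin.
    Assumes the buffer holds exactly `cycles` periods of the fundamental."""
    n = len(signal)
    k = cycles * harmonic
    re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
    amplitude = 2 * math.hypot(re, im) / n
    return 20 * math.log10(max(amplitude, 1e-12))  # floor at -240 dB

sig = clipped_sine(n=4096, cycles=8, drive=2.0, ceiling=1.0)
for h in (2, 3, 5):
    print(h, round(harmonic_level_db(sig, cycles=8, harmonic=h), 1))
```

The 2nd harmonic ends up at the numerical floor while the 3rd and 5th are strong; an asymmetric curve would be needed to produce even harmonics.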

There are certainly a lot of 'mastering plugins' with lower capabilities that do way more harm to a mix than a lower bit depth.

I constantly use that Levelizer as my only 'mastering tool' for final loudness.

If a lot of gain reduction is required without smearing and harshness, I simply repeat the process a couple of times in smaller steps instead of one big hop (which L1V could handle).

I keep Levelizer for its superior interface: you see the point where the limiter starts to engage as a line on top of the waveform. Generally it's set a bit above the dense part of the image, as it's a specialized peak treatment (without peaks its use is pointless).

Since I discovered that plugin, I've almost never used a compressor for loudness.

Since Levelizer is a SAW Studio-specific plugin, I usually suggest Anwida's L1V as a general-purpose VST. For loud masters that keep a clean sound print, it's like a magic wand.

It would of course be cool if some developer implemented something similar on iOS.

Don't forget: the small IAPs are a monthly subscription, whereas the one that's over 30 has all the features but no subscription.

Nothing wrong with this, but just to add another consideration: iOS devices are still resource constrained compared to similar PC/Mac setups (in terms of storage, raw processing power, RAM limits). Are the (debatable) advantages of 24 bits over 16 bits worth the potential extra resource load on the system?
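For the storage side of that question the arithmetic is simple: uncompressed PCM size scales linearly with bit depth, so 24-bit files are 1.5 times the size of 16-bit ones at the same sample rate. A minimal sketch that ignores file headers and assumes tightly packed samples (CPU load is a separate question this doesn't answer):

```python
def pcm_bytes(seconds: float, sample_rate: int, bits: int, channels: int) -> int:
    """Raw size in bytes of uncompressed PCM (no file header), assuming tightly
    packed samples: 2 bytes for 16-bit, 3 for 24-bit, 4 for 32-bit."""
    frames = int(seconds * sample_rate)
    return frames * (bits // 8) * channels

for bits in (16, 24, 32):
    mb = pcm_bytes(60, 44_100, bits, 2) / 1_000_000
    print(f"{bits}-bit, 44.1 kHz stereo: {mb:.2f} MB per minute")
```

One minute of 44.1 kHz stereo comes to about 10.6 MB at 16-bit versus about 15.9 MB at 24-bit.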

I've been wondering about this: if I'm sending audio over IDAM to a Mac, that connection is 2-channel, 24-bit, 48 kHz. In the WWDC intro of IDAM they say "You don't need to modify your OS X application to take advantage of this feature. If your iOS application already properly adapts to a two-channel 24-bit 48-kilohertz audio stream format, you don't have to modify your iOS application." But so much is still confusing: from what I've read, Core Audio on the Mac translates any incoming audio to 32-bit floating point LPCM, then translates it again on the output side to whatever format the endpoint(s) expect. It's challenging to set up a proper overall rate, or to decide which is best. It would seem to me that 32-bit floating point LPCM would be ideal, as it would require no translation by Core Audio. There's also the .CAF format, which seems to have its advantages, but I don't see it in any iOS apps.
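What translating 24-bit integer audio into floating point LPCM involves at the sample level can be sketched in a few lines. This is a hypothetical illustration, not Core Audio's actual converter: it assumes packed 3-byte, little-endian, signed samples (the layout WAV files use) and scales them to the -1.0 to 1.0 range. Python floats are 64-bit, but every value produced here is also exactly representable as a 32-bit float.

```python
def decode_pcm24le(data: bytes) -> list[float]:
    """Decode packed signed 24-bit little-endian PCM into floats in [-1.0, 1.0)."""
    if len(data) % 3:
        raise ValueError("byte length must be a multiple of 3")
    out = []
    for i in range(0, len(data), 3):
        value = int.from_bytes(data[i:i + 3], "little", signed=True)
        out.append(value / 8388608.0)  # 8388608 = 2**23, the magnitude of the most negative value
    return out

raw = bytes([0x00, 0x00, 0x40,   # 0x400000 = 4194304  -> 0.5
             0xFF, 0xFF, 0xFF,   # -1                  -> -1/8388608
             0x00, 0x00, 0x80])  # -8388608 (minimum)  -> -1.0
print(decode_pcm24le(raw))
```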

In general I wish we end users had more information available on buffers, conversions and the like. For example, I've got a decent understanding of how audio is processed on the Mac as it enters/crosses the Hardware Abstraction Layer, but it's much more difficult to understand the input/output buffers inside Core Audio: some are opaque, and many vary with developers' call structures and the like.

I record everything in Live @ 96 kHz and 32-bit. I've been reading about and experimenting with intermodulation, ambi/vibrasonics, and while I haven't made any final decisions, it's not that you can hear a difference, it's what the difference imparts; and of course there is a difference, irrespective of whether it's audible. Different Drummer and holistic tonality are akin.

My focus on intermodulation etc. has made me a bit obsessive about latency/jitter/buffers and the various transports between PC/Mac/iOS. I also see learning the protocols and audio structures as learning the instrument, so that you can compose rather than rely on the generative mysteries of the Lightning-to-USB connector.

I ultimately moved to an Ethernet audio/MIDI setup. I think the IEEE's Deterministic Networking group working on TSN (Time-Sensitive Networking), AVB's successor, is where it's at for audio, and since the protocol has been picked up in automotive and industrial applications, it carries weight. MOTU's newest LPD interfaces have it implemented, while the 624/828 they released just back in October are labeled "AVB" rather than TSN; it's a fast-moving protocol. But as someone mentioned above, currency leakage: there are too many audio adulterants to bit rate and the like, and while each may be sonically irrelevant on its own, we are definitely still on the inferior side, in need of better resources, documentation, and protocols. I mean, I know there's reason to be down on the new MBPs, but with 2 TB3 ports you've got 40 gigs of throughput! Should be enough!

I just don't see how USB audio ever gets past its inherent limitations: isochronous audio on USB 3.0 can allocate up to 90% of the 5 Gbps pipe, and the traffic is isolated from other USB traffic, yet time code inconsistencies and the error rate of cyclic redundancy checking (about 1 in every k packets) still eventually result in buffer over/underflows, and we get the crackles we all know and love.
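One common mechanism behind such periodic crackles (offered here as an assumption; the post above also blames CRC errors) is clock drift: if the device and the host run on independent clocks, the buffer between them slowly fills or drains until it over- or underflows, unless the driver compensates. Asynchronous USB audio devices, for example, report their actual rate back to the host through a feedback endpoint. A minimal sketch of the timing; the 100 ppm mismatch and 128 samples of slack are illustrative values, not measurements:

```python
def seconds_until_xrun(sample_rate: float, drift_ppm: float, slack_samples: int) -> float:
    """Time until a FIFO between two free-running clocks over- or underflows.
    drift_ppm is the relative clock mismatch in parts per million; slack_samples is
    how far the fill level can move before the buffer hits empty or full."""
    samples_per_second = sample_rate * abs(drift_ppm) / 1e6
    if samples_per_second == 0:
        return float("inf")  # perfectly matched clocks never drift apart
    return slack_samples / samples_per_second

print(seconds_until_xrun(48_000, 100, 128))
```

With those numbers the fill level slips 4.8 samples per second and runs out of slack after roughly 27 seconds, then the cycle repeats.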

Apologies for meandering.

How do we enter the "Win Tarekith's Entire Mastering Studio contest?"

I get the feeling Apple wants to keep it as transparent as possible so we don't have to know. And I guess the only way to really know these things is to measure them, since processor load depends heavily on the CPU/FPU used and on how conversions are handled by the system layers and the apps. E.g., on some processors you can move between integer and floating point with hardly any penalty, whereas on others it costs a lot of cycles. I always used to think fixed-point arithmetic was faster than floating point, but this too is not as black and white as it used to be. Etc., etc.
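For anyone unfamiliar with the fixed-point side: DSP code has long stored samples as Q15 integers (16-bit signed, 15 fractional bits) and multiplied them with integer instructions, handling the rescaling, rounding and saturation by hand. A sketch of that bookkeeping, which floating point does automatically; it says nothing about which approach is faster on a given CPU, which is exactly what has to be measured.

```python
def to_q15(x: float) -> int:
    """Convert a float in [-1.0, 1.0) to Q15 fixed point, saturating at the int16 limits."""
    return max(-32768, min(32767, round(x * 32768)))

def q15_mul(a: int, b: int) -> int:
    """Multiply two Q15 values: the raw product has 30 fractional bits (Q30),
    so add half an LSB, shift right by 15 to get back to Q15, then saturate."""
    product = a * b
    rounded = (product + (1 << 14)) >> 15
    return max(-32768, min(32767, rounded))

half = to_q15(0.5)
print(q15_mul(half, half), to_q15(0.25))  # both 8192
print(q15_mul(-32768, -32768))            # -1.0 * -1.0 saturates to 32767
```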

Pretty hardcore for a hobby!