
Comments

Can you try disabling "Split by markers" in the MIDI import settings?

From what I understand, Helium is a pianoroll and controller editor only, it doesn't have arrangement features. So while Helium is probably a good choice for "noodling", Xequence is more geared towards arranging full songs.

That's true! Although I'd say that both Xequence and Helium are objectively "professional" tools. Just with different feature sets 🙂

Yes, Xequence should handle thousands of clips with tens of thousands of events each without issues.

Let me know if disabling the above option helps.

Well, to start, Atom doesn't provide editing of anything but notes. It also isn't able to accurately record and play back Animoog Z performances.

As far as I know, MIDI Tape Recorder (a great recorder/player that has no editing) is the only highly reliable AUv3 for capturing and playing back MPE.

MPE also needs very accurate timing to play back accurately. Until MTR, nothing on iOS was accurate enough in its timing to capture and play back correctly, including apps that do multi-channel recording.

Many iOS MIDI sequencers do not have very high timing resolution.

That's my experience. Atom 2 can't loop MPE performances accurately. MIDI Tape Recorder is my current go-to for recording MPE.

MTR is open source. You can take a look and see what it does.

It doesn’t have the issue you mention of quantizing to buffer cycles.

Unfortunately, MTR has no editing. The issue is that there is no AUv3 MIDI recorder and editor with that precision.

It might be broken again. This was discussed in this thread:

https://forum.audiob.us/discussion/46251/let-s-talk-about-midi-sequencer-timing#latest

Live events cannot be scheduled in the current buffer because that buffer has already been dispatched (is already on its way to the output / next processor / etc.). So they always have to be scheduled in the next buffer, that's why the timestamp will always be zero (as early as possible, because they're already too late anyway).
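To make the point above concrete, here is a minimal sketch of the timing model being described. It assumes a simplified host where buffers start at multiples of the buffer size and a live event can be placed no earlier than offset 0 of the buffer that starts after it arrives. The function names are hypothetical and not taken from any app or API.

```python
def next_buffer_start(arrival_sample: int, buffer_size: int) -> int:
    """Absolute sample position of the first buffer that starts after the event arrives."""
    return (arrival_sample // buffer_size + 1) * buffer_size


def live_event_latency(arrival_sample: int, buffer_size: int) -> int:
    """Samples between arrival and the earliest possible output.

    Assumption: the event is emitted at sample offset 0 of the next buffer,
    so the latency depends on where in the current buffer the event arrived.
    """
    return next_buffer_start(arrival_sample, buffer_size) - arrival_sample
```

In this model an event that arrives just before a buffer boundary is output almost immediately, while one that arrives just after the boundary waits nearly a full buffer. That variation is the jitter discussed in this thread.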

jason

AUM's keyboard and all the AUv3 keyboard and pad apps I have tested (*) produce notes with a sample offset of zero. In the thread I only tested MIDI sequencers. If you record something like Rozeta X0X, you'll notice the sample offsets.

The test method was mentioned quite early in the thread, and I also published the AUM session I am using. With this session and a buffer size of 2048, a missing sample offset is also audible. But just looking at the MIDI monitor is usually enough.

(*) AUM’s keyboard, Xequence key, Xequence Pads, KB-1, Velocity KB, Mononoke Pads, the keyboard and chord pads integrated in LK, Tonality ChordPads, ChordPadX

Thanks for the explanation. I already suspected that there is a technical reason why none of the keyboard apps issue a sample offset.

Yes. It's not even a programming thing, it's more of a generic spacetime thing. 😉 when a live event arrives, what's currently being output is the previous buffer you prepared, and that cannot be modified anymore. So, your earliest chance for outputting is 0 in the next buffer.

I think I get what you mean -- you essentially want to introduce additional latency in order to eliminate jitter? i.e. make the delay between the input event and the output constant? Interesting. I guess input apps could have both modes as an option. Though I guess in most situations, minimal latency is more important than jitter for live input.
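The trade-off described here (more latency in exchange for no jitter) could look roughly like the following. This is only a sketch under the same simplified assumption that buffers start at multiples of the buffer size. `schedule_with_fixed_delay` is a hypothetical name, and a real host may need more than one buffer of delay.

```python
from typing import Optional, Tuple


def schedule_with_fixed_delay(
    arrival_sample: int,
    buffer_size: int,
    delay_samples: Optional[int] = None,
) -> Tuple[int, int]:
    """Return (buffer_index, sample_offset) for an event delayed by a constant amount.

    Assumption: a delay of at least one full buffer guarantees that the target
    position lies in a buffer that has not been dispatched yet.
    """
    if delay_samples is None:
        delay_samples = buffer_size
    if delay_samples < buffer_size:
        raise ValueError("delay must be at least one buffer to stay schedulable")
    target_sample = arrival_sample + delay_samples
    # divmod splits the absolute position into buffer index and offset within it.
    return divmod(target_sample, buffer_size)
```

Every event is now output exactly `delay_samples` after it arrived, so the spacing between input events is preserved, at the cost of a latency that is never shorter than one buffer.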

Missing sample offsets from a sequencer are quite audible for percussive sounds. At a buffer size of 2048 and 120 bpm the error can exceed a 1/64th note (*). And then there's also the problem of drift, which started the whole investigation in the "let's talk about sequencer timing" thread.

(*) A 48000 Hz sample rate and a 2048 buffer size give 2048 / 48000 ≈ 42.7 ms per buffer, so the maximum offset is about 42.7 ms if the sample offset is always zero. 120 bpm means 2 s for one bar, 62.5 ms for a 1/32 note and 31.25 ms for a 1/64 note.
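For anyone who wants to check the footnote's arithmetic with other buffer sizes or tempos, a small sketch follows. The helper names are made up for illustration, and a quarter note is assumed to be one beat.

```python
def buffer_duration_ms(buffer_size: int, sample_rate: float) -> float:
    """Length of one audio buffer in milliseconds."""
    return 1000.0 * buffer_size / sample_rate


def note_duration_ms(bpm: float, denominator: int) -> float:
    """Duration of a 1/denominator note, assuming a quarter note equals one beat."""
    whole_note_ms = 4 * 60_000.0 / bpm
    return whole_note_ms / denominator


if __name__ == "__main__":
    # Worst-case timing error when every event is emitted at sample offset zero.
    print(f"buffer:    {buffer_duration_ms(2048, 48000):.2f} ms")
    print(f"1/32 note: {note_duration_ms(120, 32):.2f} ms")
    print(f"1/64 note: {note_duration_ms(120, 64):.2f} ms")
```

At 48 kHz and 2048 samples one buffer lasts 2048 / 48000 s, which is about 42.7 ms, so the worst case lies between a 1/64 note (31.25 ms) and a 1/32 note (62.5 ms) at 120 bpm.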

It's an interesting point, maybe @j_liljedahl also wants to drop a short comment.

Fortunately I'm not designing the realtime control system for the Space Shuttle (I did design realtime control systems for industrial applications, but timing fortunately wasn't that critical in that case 😎)


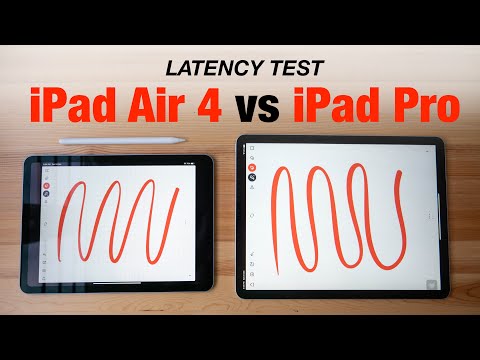

I suspect one of the reasons on-screen keyboards don't send any sample clocks is due to the speed at which the touch interactions are collected and reported by the OS. 60Hz for regular iPads, 120Hz for Pros unless screen refresh is capped to 60Hz or 240Hz when using the Apple Pencil...

I haven't checked that yet, but I will do so for the two MIDI keyboards that I own. I assume these will get the offset from the iOS system reporting the MIDI input.

No one wants to record sequencers, that's correct.

But we want sequencers that are tight and don't drift. We found out that a zero sample offset is one part of the problem, as this is audible (around 1/64 at 48 kHz/2048) for drums. The sequencers that implement sample offsets rarely show drift in our experience; maybe that's a side effect of the devs having a closer look at their code.

To check Atom/Helium or other sequencers, just input notes by tapping into their UI, set a buffer size of 2048 and have a look at the MIDI in the MIDI monitor. BUT to check MIDI Tape Recorder, which does not offer editing or note input via its UI, one obviously needs to supply an input with sample offsets to check whether they are recorded and replayed. And since keyboards don't send sample offsets, the MTR test requires recording one of the sequencers that do produce them.

In my understanding it isn't the sample offset that's needed; rather, the order of the recorded events needs to be exactly the same when recording and later playing back the MPE for Animoog/Animoog Z. It's a "dance" of MIDI CCs (74, 11 and AT), some before and some after the note-on is issued.

Atom 2 records multichannel MIDI and controller data, so in theory it could play back the MIDI that Animoog sends, but IIRC that doesn't work. If you want I can check. One could also compare the initial MIDI stream of Animoog with the replayed stream from Atom 2.

There's a connection there at least...

I don't remember the exact name of the WWDC session where response time was discussed in detail.

There's a difference when using the Apple Pencil on a 60Hz device compared to 120Hz device.

I just checked recording a single MPE note of Animoog Z in Atom 2:

In my test, Animoog Z's onscreen keyboard sends the note-ons and note-offs with a sample offset of zero. Some of the aftertouch messages get a sample offset, but most of them also use zero. The pitch bend reset before the note-on has a sample offset of zero.

Midi Monitor of input note:

The recorded version has an identical order of events, but all the CCs get a sample-offset timestamp before the note-on... maybe Animoog Z interprets that offset when processing its input MIDI buffer and re-orders them.

Midi Monitor of Atom 2 playing back the recorded events:

I also can't explain the "System" messages logged.

If I recall, one of the issues is that Animoog Z makes use of portamento events that trip up some sequencers. @SevenSystems got it working. He can fill us in about the things he did to get it working.

There is no CC data in that log.

Yes, Animoog Z was a bitch to get fully working, especially because Xequence converts the recorded MIDI into an internal, completely different representation, and then back to MIDI on output, and all this has to be fully transparent. (i.e. the task is much more difficult than with something like MIDI Tape Recorder).

Here's a page from another thread where this whole thing got fixed:

https://forum.audiob.us/discussion/48946/xequence-2-3-public-beta-mpe/p2

Yes, that should be the case, but it's often difficult in practice. If you have a pianoroll editor with a ton of features, for example, you just cannot work with the raw MIDI data, it would increase the development effort by an order of magnitude. So you have to convert the incoming MIDI data to some internal representation that is easier to work with, and then back to MIDI on playback.

The difficult part is to keep this whole process transparent so that playback creates exactly the same MIDI stream as what was recorded, even though the data went through two transformations.
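To illustrate what "transparent" means here, the sketch below round-trips a tiny event stream through a note-based internal model and back. It is not how Xequence does it. The types, field names and the idea of storing the original stream position (`seq`) as a tie-breaker for events with equal timestamps are all assumptions made for this example, and notes without a matching note-off are simply dropped.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# (time in ticks, channel, kind, data1, data2)
Event = Tuple[int, int, str, int, int]


@dataclass
class Note:
    channel: int
    pitch: int
    start: int
    end: int
    velocity: int
    release: int
    on_seq: int   # position of the note-on in the recorded stream
    off_seq: int  # position of the note-off in the recorded stream


@dataclass
class Controller:
    time: int
    channel: int
    kind: str
    number: int
    value: int
    seq: int


def to_internal(events: List[Event]) -> Tuple[List[Note], List[Controller]]:
    """Convert a raw stream into notes with a duration plus separate controller events."""
    notes: List[Note] = []
    controllers: List[Controller] = []
    open_notes: Dict[Tuple[int, int], Tuple[int, int, int]] = {}
    for seq, (time, channel, kind, d1, d2) in enumerate(events):
        if kind == "note_on":
            open_notes[(channel, d1)] = (time, d2, seq)
        elif kind == "note_off":
            start, velocity, on_seq = open_notes.pop((channel, d1))
            notes.append(Note(channel, d1, start, time, velocity, d2, on_seq, seq))
        else:
            controllers.append(Controller(time, channel, kind, d1, d2, seq))
    return notes, controllers


def to_midi(notes: List[Note], controllers: List[Controller]) -> List[Event]:
    """Flatten the internal model back into a stream, keeping the recorded order on ties."""
    out = []
    for n in notes:
        out.append((n.start, n.on_seq, n.channel, "note_on", n.pitch, n.velocity))
        out.append((n.end, n.off_seq, n.channel, "note_off", n.pitch, n.release))
    for c in controllers:
        out.append((c.time, c.seq, c.channel, c.kind, c.number, c.value))
    out.sort(key=lambda e: (e[0], e[1]))
    return [(t, ch, kind, d1, d2) for t, _seq, ch, kind, d1, d2 in out]
```

Without the `seq` tie-breaker, a CC and a note-on that share a timestamp could come back in either order, which is exactly the kind of reordering that seems to upset Animoog Z.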

That's what I tried to do in Xequence, and I think with reasonable success. But I'm biased 😄

You did a great job!

Yes that's true... I've looked at all of that and actually added comprehensive (N)RPN support to Xequence mostly so that MPE works (well, some synths, especially older hardware, still use (N)RPNs instead of CCs for a lot of stuff so it's a bonus anyway!).

I agree that the implementations differ a lot, partly because the MPE specification is either inaccurate or a bit loose ("you can do it either way")... that's why Xequence has a bazillion settings for MPE recording, editing, and playback (settings! The stuff you hate! 😜)


I can confirm that the on-screen keyboard in AUM does not provide any sample offsets, because it's really mostly meant for testing out stuff. Also it's non-trivial to fix, because the MIDI timestamps are sample offsets within the current audio buffer (in AUM internally and in AUv3 in general) which is accessed in the audio thread, while the on-screen keyboard is handled in the separate UI thread, so one would need to put the note events in a lock-free queue, figure out the minimum delay needed to dispatch them in the next (but not the current) audio buffer, and convert their UI event timestamps into sample offsets within that audio buffer.
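The steps listed above (queue the events, add a minimum delay, convert timestamps into offsets within the buffer) might be sketched like this. It is an illustration only: `collections.deque` stands in for a real lock-free queue, which Python does not offer, both timestamps are assumed to be seconds on the same clock, and `drain_for_buffer` is a hypothetical function that the audio thread would call once per render cycle.

```python
from collections import deque
from typing import Deque, List, Tuple


def drain_for_buffer(
    pending: Deque[Tuple[float, str]],
    buffer_start_s: float,
    buffer_size: int,
    sample_rate: float,
    delay_s: float,
) -> List[Tuple[int, str]]:
    """Pop the events that fall into this buffer and return (sample_offset, message) pairs.

    pending holds (ui_timestamp_s, message) in arrival order, pushed by the UI thread.
    delay_s is the fixed delay added so that events land in a buffer not yet rendered.
    """
    ready: List[Tuple[int, str]] = []
    while pending:
        ui_time_s, message = pending[0]
        offset = round((ui_time_s + delay_s - buffer_start_s) * sample_rate)
        if offset >= buffer_size:
            break  # belongs to a later buffer; leave it and everything after it queued
        pending.popleft()
        # Events that are already late are clamped to the start of this buffer.
        ready.append((max(offset, 0), message))
    return ready
```

The UI thread would only ever append to `pending`, and the render callback would only ever pop from the front, which is the single-producer, single-consumer pattern that a lock-free ring buffer is normally used for.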

Oh, yes this is also an issue of course.

There’s actually no point in trying to use the UI timestamps. At 60 Hz that would give the same jitter as using no timestamps and a buffer size of 735 samples at 44.1kHz sample rate.
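The 735 figure is simply the number of audio samples that pass between two touch reports. A quick sketch for trying other touch rates (the function name is made up for this example):

```python
def equivalent_buffer_size(sample_rate: float, touch_rate_hz: float) -> float:
    """Audio samples between two consecutive touch reports.

    Timestamps quantized to the touch rate give about the same jitter as
    zero sample offsets with a buffer of this many samples.
    """
    return sample_rate / touch_rate_hz


if __name__ == "__main__":
    for rate in (60, 120, 240):
        print(rate, "Hz ->", equivalent_buffer_size(44100, rate), "samples")
```

At 44.1 kHz this gives 735 samples for 60 Hz and 367.5 samples for 120 Hz.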