Comments

Just use Collider and lock it to a Scale and tweak your note generation, and point it to an instrument in AUM.
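For anyone wondering what "locking to a scale" amounts to, here is a rough Python sketch of the general idea: generate random MIDI notes, then snap each one down to the nearest note in a chosen scale. This is not how Collider does it internally. The function names, the note range and the choice of a C major scale are all assumptions made up for illustration.

```python
import random

# Semitone offsets of a major scale from its root
# (a music-theory fact, not a Collider setting).
MAJOR_SCALE = (0, 2, 4, 5, 7, 9, 11)


def snap_to_scale(note, root=60, scale=MAJOR_SCALE):
    """Return the nearest MIDI note at or below `note` that is in the scale.

    Hypothetical helper: `root` is the MIDI note number of the scale root
    (60 = middle C) and `scale` is a tuple of semitone offsets that
    must include 0.
    """
    octave, degree = divmod(note - root, 12)
    allowed = max(step for step in scale if step <= degree)
    return root + 12 * octave + allowed


def generate_notes(count, low=48, high=84, root=60, scale=MAJOR_SCALE, seed=None):
    """Pick `count` random notes between `low` and `high`, then snap each to the scale."""
    rng = random.Random(seed)
    return [snap_to_scale(rng.randint(low, high), root, scale) for _ in range(count)]


if __name__ == "__main__":
    # Eight scale-locked notes; pass a seed to get a repeatable sequence.
    print(generate_notes(8, seed=1))
```

The resulting note numbers would then be sent as MIDI to whatever instrument is loaded in the host.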

Minute 7:54:

Aaaannd finally, unrelated to my previous two posts:

Can anyone recommend the best workflow for recording/tweaking/playing back many channels of MIDI at the same time? (16, to be precise)

Logically it felt like one instance per channel was the right way to go, but the amount of repetitive tweaking (which feels lovely to do in one instance) and re-arming of record that I'm having to do just while testing on channel 1 makes me feel like flicking through 16 AU instances to adjust the same parameter/record area/arm to record (etc.) is not going to be a very quick flow.

I'd also like to say thanks for the most amazing piece of software! Opens a lot of doors with what I'm trying to do at the moment.

But all these apps are ideal for generative music, Drambo, Mozaic and Rozeta especially. AUM is kinda ideologically close to generative music.

Very true. These apps allow one to step into a state of flow quite quickly


I really dislike the modular idea. I love the DAW concept: it fits my way of working, and I don’t wanna plug anything in.

Mozaic is a stay-away for me because I am a programmer in my day job. If I buy Mozaic, it is a rabbit hole I am going to fall into for the next 12 months.

I just wanna make music: no programming, no plugging in millions of cables and definitely no dozens of hours trying to understand Drambo.

Thank you, that is very much along the lines of what I want. 😀👍 And nothing new to buy.

Definitely need to play about with this methodology!

@ecou Wise words ... staying away from Mozaic. I’m a dev by day and lost myself for a year (literally) in Mozaic. Still trying to pull myself out to make some music.

I’m the opposite with Mozaic. It’s stopped me wasting time looking for obscure MIDI workarounds or elusive apps to solve obtuse workflow problems — these days I just go for the most direct possible solution with Mozaic (because you know it’ll be possible!) and hack through to the other side, coming away with a reusable new function that solves a specific problem.

Thanks for your word of warning. 😎👍 Knowing when to stay away from something is half the battle.

Thankfully I'm a good guy and won't even mention here that Atom 2 has full scripting ability. That would just be mean.

@wim I’m almost finished making my Mozaic scripts fully integrated with Launchpad, but I’ve been thinking about adding in an Atom launcher. Just spent 30 mins friggin’ around trying to learn how to launch patterns with another instance of Atom before realising my issue was that I hadn’t upgraded my version of Atom... gawd! I need a looooong break.

I did see that but I figured I might be able to stay away since it is not the main focus of the app unlike Mozaic.

I need one of these threads for Drambo!

Indeed, thanks for bringing this up! I'll try to iron out that papercut soon.

Glad to help. I'll let you know if I run into any other irrational behaviour on my weird audio adventures -- I'm like a human property based test with all the strange edge case states I push my system into.

Me too!

I am a level-zero dummy actually: please explain why I would need to launch clips instead of playing good old sequences of MIDI events in the first place?

Cliplaunching is the perfect activity when you find yourself asking yourself the question "got anything to do for the next 4 to 7 hours?".

Parody reply there, but I think it encapsulates a little of the essence of the experience. It facilitates a more 'experiential' interaction with the music (every moment is perfection), compared to the 'linear' experience that is more cognitively intuitive to us (the cumulation of events achieves perfection).

If you listen to a few pieces by Debussy and/or Stravinsky and then Schubert and/or Beethoven, I think you could fairly imagine that Debussy and Stravinsky would prefer cliplaunching while Schubert and Beethoven would incline towards linear sequencing.

(I also think I've summarised about 200 years of European musical history in that last statement)

I’m still pretty new to the idea myself but I see these as two big advantages as someone who is still a DAW/timeline person:

Good points, but I think your two points are actually in opposition. You'd be much more free to experiment in a linear timeline where every note is completely independent, whereas in the cliplaunch paradigm you'd have to maintain an awareness of the side effects of edits across the entire work -- a potentially cognitively complex abstraction. The flip side of this is the efficiency and speed with which you can apply changes across your entire timeline in the cliplaunch paradigm.

Fair point. For me the experimentation timeline shifts to being inside Atom rather than being on my DAW timeline. To reduce the cognitive complexity I group together musical sections using DAW clip functionality and then can benefit from DAW clip aliasing (in NS2, for example). I then not only remove redundancy from repeated MIDI notes but the clip launch configuration within a DAW clip is itself never repeated as all the linked DAW clips update themselves together.
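To make the aliasing idea concrete, here is a minimal Python sketch of linked clips, assuming a clip is just a reference to a shared pattern. It is not NS2's or Atom's actual data model; `Pattern`, `Clip` and `render` are hypothetical names invented for this illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Pattern:
    """A reusable musical section (hypothetical model)."""
    name: str
    notes: list = field(default_factory=list)  # (beat, MIDI note) pairs


@dataclass
class Clip:
    """A placement of a pattern on the timeline."""
    start_bar: int
    pattern: Pattern  # a reference, not a copy: this is the "alias"


def render(timeline, beats_per_bar=4):
    """Flatten the clips into a sorted list of (absolute beat, MIDI note) events."""
    events = []
    for clip in timeline:
        offset = clip.start_bar * beats_per_bar
        events.extend((offset + beat, note) for beat, note in clip.pattern.notes)
    return sorted(events)


# Two clips share one pattern, so a single edit shows up in both places.
verse = Pattern("verse", [(0, 60), (2, 64)])
timeline = [Clip(0, verse), Clip(4, verse)]
verse.notes.append((3, 67))

if __name__ == "__main__":
    print(render(timeline))
```

The trade-off mentioned earlier in the thread shows up here too: one edit updates every linked clip at once, which is fast, but it also means you have to remember everywhere that pattern is used.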

At first I did have a problem with not seeing what the notes on the other tracks were playing as it makes composition more difficult but you can use the layers functionality of Atom to overlay the notes from other patterns to aid with composition. You also obviously lose the MIDI preview of your data in the DAW which is a helpful thing to have but this can be worked around by appropriate naming of your DAW clips.

I know it is obvious but I should mention that this way of working does not stop you from creating MIDI elsewhere and then bringing it in to this environment for arrangement into a larger song. For example, a drum sequence may come from Quantum and the melody and bass come from a score in StaffPad. As long as it ends up as MIDI then it can be imported and used as a pattern. Even if the music comes from other apps you still benefit from the flexible arrangement.

Abstraction is amazing and incredibly powerful when applied to any field -- I'm with you all the way on that.

The only reason that I'm not personally using Atom2 for musical pattern abstraction is that I'm using a much more powerful abstraction tool called 'TidalCycles' (powerful in that the limitations of a GUI are discarded in favour of a pure text interface, and the composability of text is highly flexible). I do pretty much exactly what you're describing on that abstraction layer, which then collapses its abstracted complexity down into Atom2 as a more 'literal' persistence layer.

Let it be said that I see this flexibility of how Atom2 is utilised as an absolute positive point and design triumph for the project.

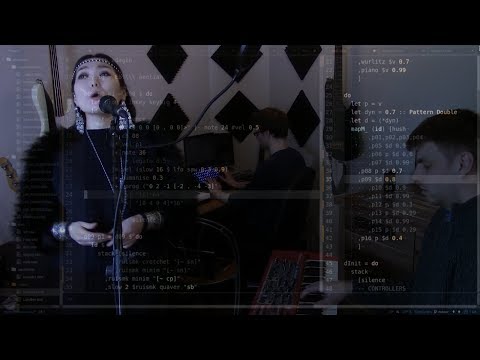

Here's a quick technical example of musical abstraction with a linear approach:

Here's a semi-structured-jam piece where I'm 'cliplaunching' in pretty much exactly the way that you've described with regard to Atom2:

As mentioned above, Atom2 gives me an independently controllable modular layer of persistence that can be separated from the abstraction layer and taken out to concerts where 'live coding' in real time might not be context-suitable (or where I want to perform a different instrument).

I could also theoretically facilitate the entire composition process with just Atom2 -- using the modular GUI interface to basically achieve the same thing (nonlinear abstraction layer collapsing down into a linear persistence layer).

I don't have any demos of working with Atom2 yet (apart from the bug report vids above) but I'll think about making some when I get the whole system flowing smoothly.

I wish I could run Tidal on iOS.

Hahaha, I don't!

(although imagine being able to build modular MIDI and Audio processor AUs with it in the same way as we can build MIDI AU with Mozaic)


Moving post to main Atom thread so as not to derail the conversation.

Transposing/Quantizing Key/Scale in Atom2?

Edit.. Editing sequences.. etc. etc..

Fascinating, but not really what one expects in a “for Dummies” thread!