Comments

IMO this is by far the most interesting phenomenon in all of science (there are several great books about it)

I don't think anyone has ever postulated that the experiment means we are living in a simulation, but it does point to a profound mystery at the heart of reality. We have no idea what it is though, although there are several hypotheses.

Here's a better video about it IMO, from an actual physicist:

Could you point me towards an argument that supports this? Everything I've read on the subject has just been tech-bro bullshit. I've yet to see a convincing argument that we are in fact living in a simulation. (This probably comes across as testy, but I'm genuinely curious to read a good argument in favour of the idea, if you know of one).

I'm not sure I'll be able to find the articles I had read, but I'll see if I can. To be absolutely clear though they weren't out to prove that we are in a simulation by any means, and neither am I.

One well-presented article I found thought-provoking explored the argument that the mathematical odds that we are in a simulation are as high as, or higher than, the odds that we are not. It wasn't intended to establish anything as a fact.

Another I enjoyed explored how readily we begin to adapt to alternate "realities" such as Zoom meetings - something that I could relate to since the pandemic. The extension of the thought was that if this level of adaptation can be achieved with our admittedly primitive technology, would it be such a leap for a more advanced entity to construct what we now perceive?

None of it claimed to be scientific. And I don't take it that way. However, I am absolutely convinced that we understand very little of what reality is. Challenging our assumptions is healthy.

it is not only possible

it is true.

but not in the way proposed in the topic...

we live already in a simulation made by rich (and all their instruments of power, ideologies, religion, etc)

over the poor. 3%'s simulation for the rest of 97%.

chances are - we are in the 97%...

this philosophical quest of "do we live in a simulation?"

is just another intellectual diversion to make the mind wander in

possible realms and not in the daily reality.

so you won't see the social sandbox/zoo you are locked in.

to think upwards, not downwards - so to speak...

Nick Bostrom, who brought the idea of the simulation into the mainstream

is a very dangerous man. Just check his "longtermism" right wing movement

and you'll see why...

Against longtermism

It started as a fringe philosophical theory about humanity’s future.

It’s now richly funded and increasingly dangerous

https://aeon.co/essays/why-longtermism-is-the-worlds-most-dangerous-secular-credo

It's true. I've been to Slough. Years ago. Disaster Recovery test... and what a disaster to be told your recovery is to be in Slough.

Betjeman was ahead of his time:

www-cdr.stanford.edu/intuition/Slough.html

I’m very much enjoying the discussion here. Lots of input for me to think about.

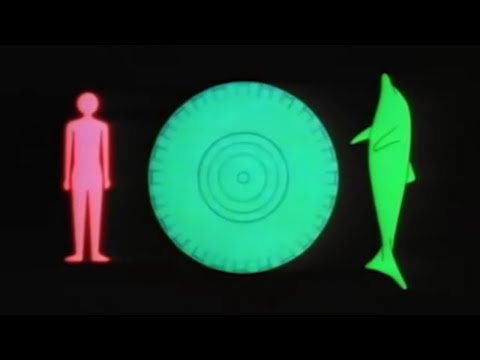

First of all it is so refreshing to see the Other back. Didn’t think I’d see one of these posts again. Secondly, I am open to the idea of this being a simulation. There were many different clips I could have chosen but I think this one kind of sums it all up…

Ahhh ... now I remember. @richardyot - I can't find the articles, but I believe the author was someone named Douglas Adams. Thanks for jogging my memory @Mountain_Hamlet.

A lot of truth in your words here!

Hey, without Slough we might never have had 'The Office' 😂

I had a dream last night the transformers invaded us. When I tried to record them and play it back to show others, the footage was wiped.

What’s that?

Ah, wrong thread. My bad.

Anytime. I didn’t realise so much of the original is up on the tube thanks to the BBC.

This is spot on, the idea that we are living in a simulation comes from deluded tech-bros. Sam Bankman-Fried of FTX infamy was a prominent longtermist.

Elon Musk and Peter Thiel are also into all this BS. They also believe in Roko's Basilisk and all the associated bullshit. It's all delusional fairy tales.

Yes, it basically gives them carte blanche to be selfish assholes who don't give a damn about anybody but themselves. Complete distraction from the stuff that really matters.

Thanks for putting us all in danger.

You have to understand that they are being selfish assholes for the good of humanity, and if you happen to get crushed under their wheels, well that's just the price you pay for human advancement

👿

IMO the whole concept of artificial intelligence is completely overhyped. As impressive as Midjourney and ChatGPT might be, we are absolutely not on the cusp of some kind of sentient hyper-intelligent AI. For starters we have no idea what sentience even is, we simply don't know what makes consciousness happen.

There's this notion that if you somehow create enough connections in a network it will somehow become self-aware, but there is zero evidence to back this up. It's not like a switch gets flipped once you add just one more connection. Why would a computer algorithm ever become self-aware? By what mechanism could this happen? We simply don't have the answers to these questions.

The problem is that we are easily fooled by what is essentially marketing. ChatGPT can spit out convincing-looking text, but it's simply condensing information found on the internet that was created by humans. It is not in any way sentient, and will never be. It is a remarkably clever algorithm, but it's not alive.

Some worthwhile articles that might help put the hype into perspective (thanks to @espiegel123 for pointing them my way):

https://www.newyorker.com/tech/annals-of-technology/chatgpt-is-a-blurry-jpeg-of-the-web

https://www.newyorker.com/culture/annals-of-inquiry/why-computers-wont-make-themselves-smarter

richardyot:

Morozov explains the state of AI and the hype clearly and briefly here:

The problem with artificial intelligence? It’s neither artificial nor intelligent

Evgeny Morozov

https://www.theguardian.com/commentisfree/2023/mar/30/artificial-intelligence-chatgpt-human-mind

also related to all of these

a good read:

Silicon Valley’s Crisis of Conscience | The New Yorker

https://www.newyorker.com/magazine/2019/08/26/silicon-valleys-crisis-of-conscience

also I'll spice it with an article about Nick Land from 2015...

There’s only really been one question, to be honest, that has guided everything I’ve been interested in for the last twenty years, which is: the teleological identity of capitalism and artificial intelligence. – Nick Land

https://socialecologies.wordpress.com/2015/08/28/nick-land-teleology-capitalism-and-artificial-intelligence/

@waka_x thanks, I'll read through these.

If you have no answer to the question in [1], you can’t state [2]. You can’t say “we don’t know if machines can have consciousness, but I am pretty sure they will never have it”; you are contradicting yourself here.

I’m not disputing that our current understanding of self-awareness and consciousness in general is insufficient. It may even be that the whole idea of self-awareness is a myth, and that we too are just highly effective optimisation systems whose main goal is to survive :-))

Until we have a clear definition and understanding of how consciousness emerges, discussions about AI and about the point at which it becomes self-aware are a waste of time.

Also, AI doesn’t need to be self-aware to be highly useful (it already is; the GPT-based GitHub Copilot saves me around 3-6 hours of work daily), but also deadly dangerous.

And the question of whether we live in a simulation is just pseudoscience. Sabine did a nice video on this topic. I was a big proponent of the idea, but I am not anymore. A waste of time too.

I am sure the pain I feel in my knee since my last tennis match is the consequence of a sadist IT guy who is responsible of the "pissing people off" department

Sabine is good. Will watch later

@richardyot / anyone else looking for the original strong argument: not only is it possible that we are living in a simulation, at a purely statistical level it is the most likely circumstance, as the notable Oxford University philosopher Nick Bostrom famously proposed in the short, readable paper on the subject which put this idea into the mainstream:

https://www.simulation-argument.com/simulation.pdf

Update: I see @waka_x and @Gavinski beat me to it, and also highlighted a right wing aspect to all this. I’ll check the piece out, thanks! (Note to self: read the first page before commenting on the second!)

Update to the update: ok, now I have read the argument against longtermism. My non expert take is that it is a straw man argument comparing apples and oranges, by taking aspects of the philosophical concern for humanity en masse into an unimaginable future and yoking that framing to fears of neglecting near term concerns like climate change, principally because of the vast sums being thrown at the idea by some pretty unpleasant people, because aspects of it accord with their own particular hobby horses.

The argument then makes an unsupported leap to say that concern for the distant future comes at the expense of the immediate present. This is the argument always deployed against, for example, human space exploration. It’s a false dichotomy. There is no reason this need be a zero sum game.

Can I point out here that Brian Eno, not exactly your typical goose stepping Nazi, has also expressed a (for me, entirely worthy) interest in vast timescales, through his involvement in the Clock Of The Long Now project?

https://longnow.org/clock/

Btw, there’s even an app for that:

https://apps.apple.com/gb/app/longplayer/id959561382

A rather beautiful generative music app which will not repeat a sequence for 1000 years. Probably a bit longer than this iPad battery will last.

Such a philosophical perspective sure beats the extreme short termism of our average elected representative. (Usually measured in how many months to the next election.)

The singular obsessions of arseholes like Thiel and other tech bros who are weaponising their own take on the idea don’t take away from the strength of the initial simulation argument, even if it comes from a right wing place. (Which I don’t think it has to.)

Just take a look at what is being achieved in Unreal Engine 5 now, less than a century after the invention of the computer, think of the progress already made on brain/computer interfaces and so on, and think what kinds of simulation might be possible 100, 1000, or 10000 years from now. For reference, this is footage from a forthcoming game:

The devs are having to persuade people this is actual in game footage, and not a mocked up live action shoot.

Would ‘sims’ inside a simulation that far down the road from us find it easy to realise that was what they were? And, if not, what are the odds that we are the original meat bags, and not some later iteration? Just purely at a statistical level - the nub of Bostrom’s argument - the chances of us being the ‘originals’ are vanishingly small. Not none, but very, very small…
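For anyone who wants to play with the numbers, here is a minimal sketch of the fraction at the heart of the linked paper, which gives the share of human-type observers who are simulated as f_sim = (f_P · f_I · N_I) / (f_P · f_I · N_I + 1). The function name and the input values below are made up purely for illustration; nobody knows the real values, and that uncertainty is the whole debate.

```python
def simulated_fraction(f_p: float, f_i: float, n_i: float) -> float:
    """Fraction of human-type observers who live in a simulation.

    f_p: fraction of human-level civilisations that reach a posthuman stage
    f_i: fraction of posthuman civilisations interested in ancestor-simulations
    n_i: average number of ancestor-simulations run by an interested civilisation
    """
    x = f_p * f_i * n_i
    return x / (x + 1.0)


# Hypothetical inputs, chosen only to show how the formula behaves.
print(simulated_fraction(0.5, 0.5, 4.0))    # prints 0.5
print(simulated_fraction(0.01, 0.01, 1e9))  # very close to 1
print(simulated_fraction(0.0, 1.0, 1e9))    # prints 0.0: no posthumans, no sims
```

The fraction only gets close to 1 if the product of the three inputs is large. If almost no civilisation reaches that stage, or almost none bothers to run such simulations, it stays near zero, which is why the paper frames its conclusion as a choice between three possibilities rather than as a proof.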

I think fundamentally we agree - my point is that all the current hype around AI is based on a false premise: machine learning is impressive and potentially useful, but the hype that AI is going to become self-aware in the near future is clearly nonsense.

(just to clarify) I don't think this is a contradiction, it's merely pointing out that an algorithm that regurgitates information found on the internet is not sentient, it's just an algorithm. It might sound convincingly human, but it's still just code. I don't think any large language model is ever going to become sentient, it's just an algorithm and nothing more.

Nobody knows exactly what self-awareness (or consciousness) actually is or how it arises in (higher) animals, so it is impossible to either prove or rule out if an AI is self-aware.

However, all theories on how consciousness arises in the mammalian brain are compatible with artificial neural networks, i.e. the same processes could take place in ANNs. So, consequently, large ANNs could already be conscious, by most currently proposed definitions of consciousness.

Well I absolutely agree with your first point, and I've made it myself earlier in the thread.

The second point is speculation, since we just don't know what consciousness is, we have no idea if neural networks can ever achieve it. I think the claim that existing neural networks are already conscious is far-fetched, and highly implausible. 🤷‍♀️

There are several common misconceptions here.

Neural networks are not algorithms, neither are they code. In the case of GPT-3.5 for example, it's just a huge amount (hundreds of billions) of numbers that define abstract connections. Why exactly GPT is so good at what it does (i.e., nearly everything), nobody really knows. Nobody sat down with a code editor and wrote a program (yes there is a program that runs the neural network, but it's extremely simple).
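To make the "numbers plus a very simple program" point concrete, here is a toy sketch. It is an assumption-laden illustration, not how GPT is actually built: the weights are invented by hand, there are nine of them instead of hundreds of billions, and the layout is a plain two-layer network rather than a transformer.

```python
import math

# Hypothetical toy weights. In a real model these numbers are learned from
# data rather than typed in, and there are billions of them.
W1 = [[0.5, -1.0], [1.5, 0.25]]  # 2 inputs -> 2 hidden units
B1 = [0.0, -0.5]
W2 = [1.0, -2.0]                 # 2 hidden units -> 1 output
B2 = 0.1


def forward(x):
    """Run the network: multiply, add, squash, repeat. That is the whole program."""
    hidden = [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(W1, B1)
    ]
    return sum(w * h for w, h in zip(W2, hidden)) + B2


print(forward([1.0, 2.0]))
```

All of the behaviour lives in the numbers; swapping in different weights gives a different function without touching a line of `forward`.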

It also doesn't "just regurgitate information", any more than a human poet or a human writer or a human doctor does.

It depends on your definition of "regurgitation". On a fundamental level, the very definition of intelligence is "regurgitation of information in a smart way" 😉 No poet could write an English poem without first having learned tens of thousands of English words and having seen many other poems. So, all they're doing is regurgitating information.

Yes, I agree it's all speculation. But then I'm not sure why you categorically rule out consciousness in ANNs. I think many people are subconsciously afraid of the prospect and thus reject it instinctively. Just like the idea of extraterrestrial life.